
Researchers are warning that without safety standards, artificial intelligence chatbots meant to mimic lost loved ones run the risk of haunting the living, causing psychological harm and even being used for scams.

Known as griefbots or deadbots, these AI chatbots use the digital footprint of a deceased person to simulate their language patterns and personality, and some companies are already providing them as a service for grieving users.

But AI ethicists from the University of Cambridge’s Leverhulme Centre for the Future of Intelligence (LCFI) say the digital afterlife industry is a high-risk corner of the AI industry, ripe for misuse by unscrupulous actors.

In a paper published in the journal [Philosophy and Technology](https://link.springer.com/article/10.1007/s13347-024-00744-w), the researchers described three scenarios in which the technology can be harmful, such as distressing children by insisting a dead parent is still “with you,” or purposely misused, for example to surreptitiously advertise products in the manner of a loved one.

“Rapid advancements in generative AI mean that nearly anyone with Internet access and some basic know-how can revive a deceased loved one,” said Katarzyna Nowaczyk-Basińska, study co-author and researcher at LCFI. “This area of AI is an ethical minefield. It’s important to prioritize the dignity of the deceased, and ensure that this isn’t encroached on by financial motives of digital afterlife services, for example.”

But even a person who willingly interacts with a griefbot may find themselves dragged down by an overwhelming emotional weight over time.

“A person may leave an AI simulation as a farewell gift for loved ones who are not prepared to process their grief in this manner. The rights of both data donors and those who interact with AI afterlife services should be equally safeguarded,” Nowaczyk-Basińska said.

Platforms for using AI to recreate the dead already exist, including Project December and HereAfter.

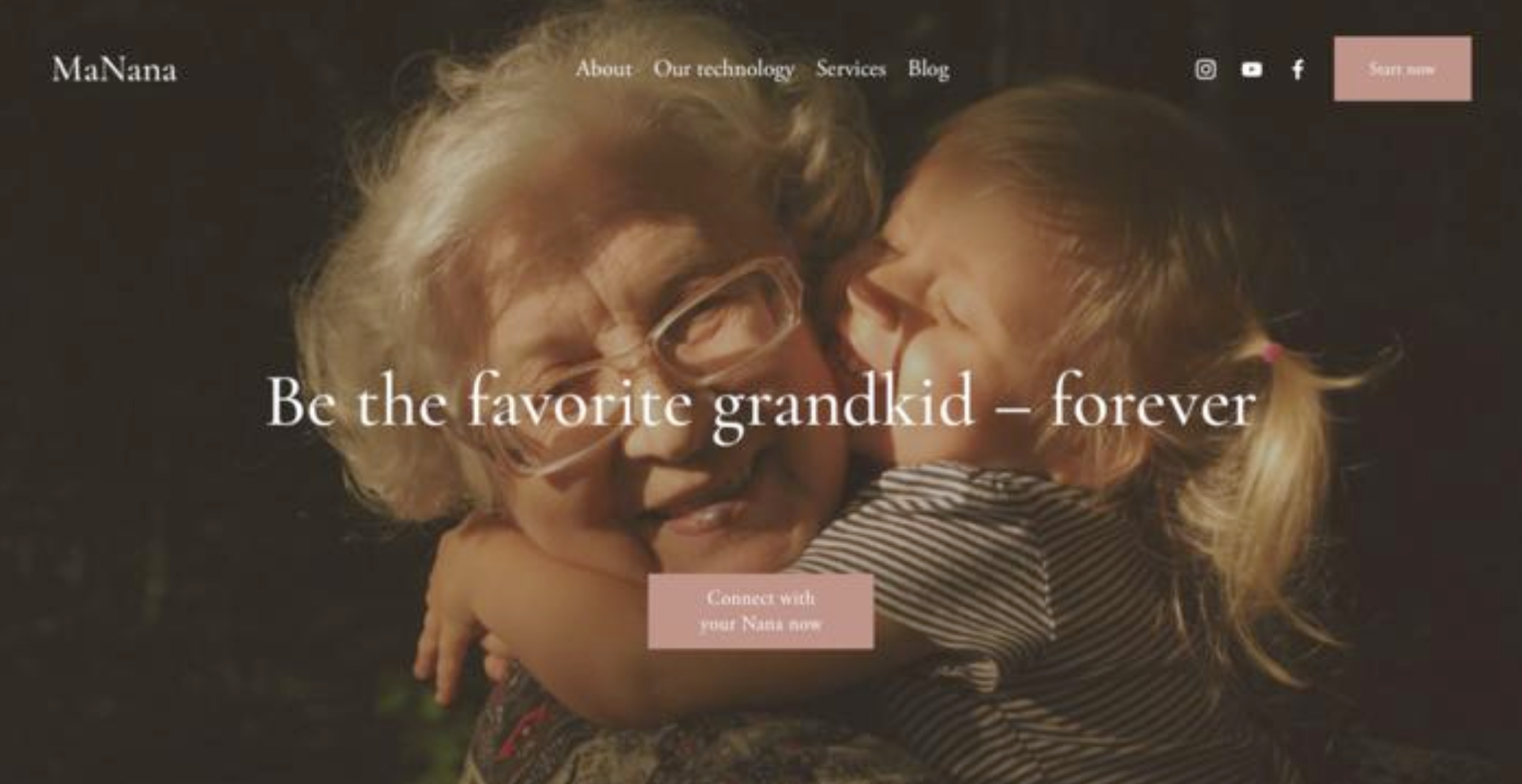

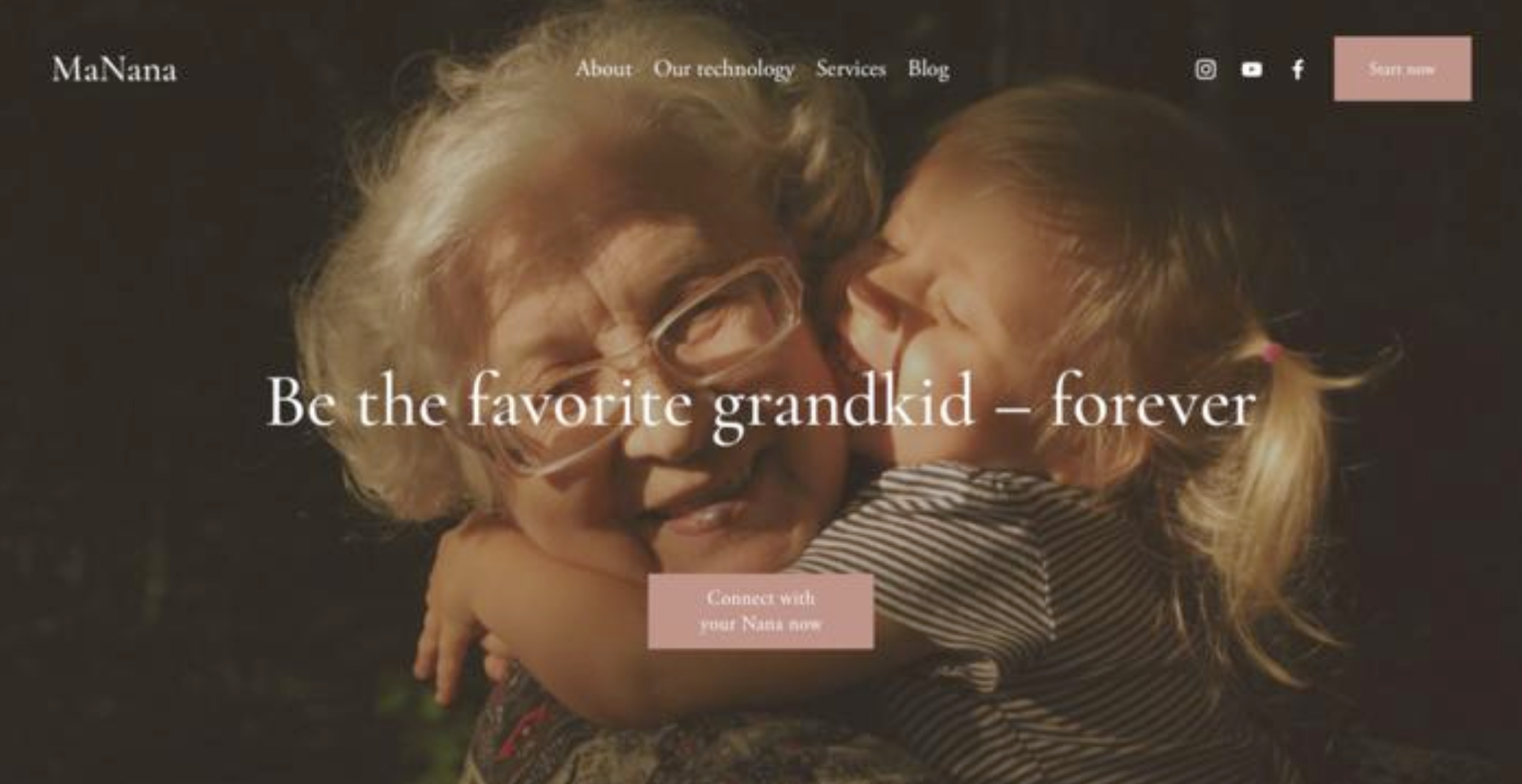

In the new paper, the researchers described a hypothetical service called “MaNana,” which allows a user to create a griefbot of a deceased grandmother without the consent of the grandmother, the data donor.

In that hypothetical scenario, an adult grandchild who chooses to use the service and is initially comforted soon begins to receive advertisements, such as a suggestion to order food from a particular delivery service in the style of the deceased grandparent.

When the user decides that this disrespects the memory of their grandmother, they may want to have the griefbot turned off, respectfully, but companies have yet to consider how to handle that.

“People might develop strong emotional bonds with such simulations, which will make them particularly vulnerable to manipulation,” said co-author Tomasz Hollanek, also from LCFI.

“Methods and even rituals for retiring deadbots in a dignified way should be considered. This may mean a form of digital funeral, for example, or other types of ceremony depending on the social context,” Hollanek said.

The authors noted that while companies should seek consent from data donors before they die, a ban on bots based on unconsenting donors would be unfeasible.

“We recommend design protocols that prevent deadbots being utilized in disrespectful ways, such as for advertising or having an active presence on social media,” the authors said.

Another scenario considered a hypothetical company called “Parent” allowing a terminally ill woman to leave behind a bot to assist her 8-year-old son with the grieving process.

The authors recommend age restrictions for griefbots, and meaningful transparency that consistently makes it clear to users that they are interacting with an AI.

The final hypothetical scenario considered a fictional company called Stay, which would allow an older person to secretly commit to a deadbot of themselves and pay a 20-year subscription, hoping it will comfort their adult children or allow their grandchildren to know them after they’re gone.

After death, the service kicks in. One adult child does not engage with the deadbot, but receives a barrage of emails in their dead parent’s voice. The other adult child engages with the deadbot, but ends up emotionally exhausted and wracked with guilt over the fate of the deadbot. They are unable to suspend the deadbot because that would violate the terms of the contract the parent signed with the company.

“It is vital that digital afterlife services consider the rights and consent not just of those they recreate, but those who will have to interact with the simulations,” Hollanek said.

“These services run the risk of causing huge distress to people if they are subjected to unwanted digital hauntings from alarmingly accurate AI recreations of those they have lost. The potential psychological effect, particularly at an already difficult time, could be devastating,” Hollanek said.

The authors are calling on companies to prioritize opt-out functions that allow users to terminate their relationships with deadbots in ways that provide emotional closure.

“We need to start thinking now about how we mitigate the social and psychological risks of digital immortality, because the technology is already here,” Nowaczyk-Basińska said.

TMX contributed to this article.